A distributed cache is a shared in-memory store spread across multiple computers in a network. It speeds up data access by keeping frequently used data close to where it's needed. This reduces the number of trips to the central database and makes applications faster and more responsive.

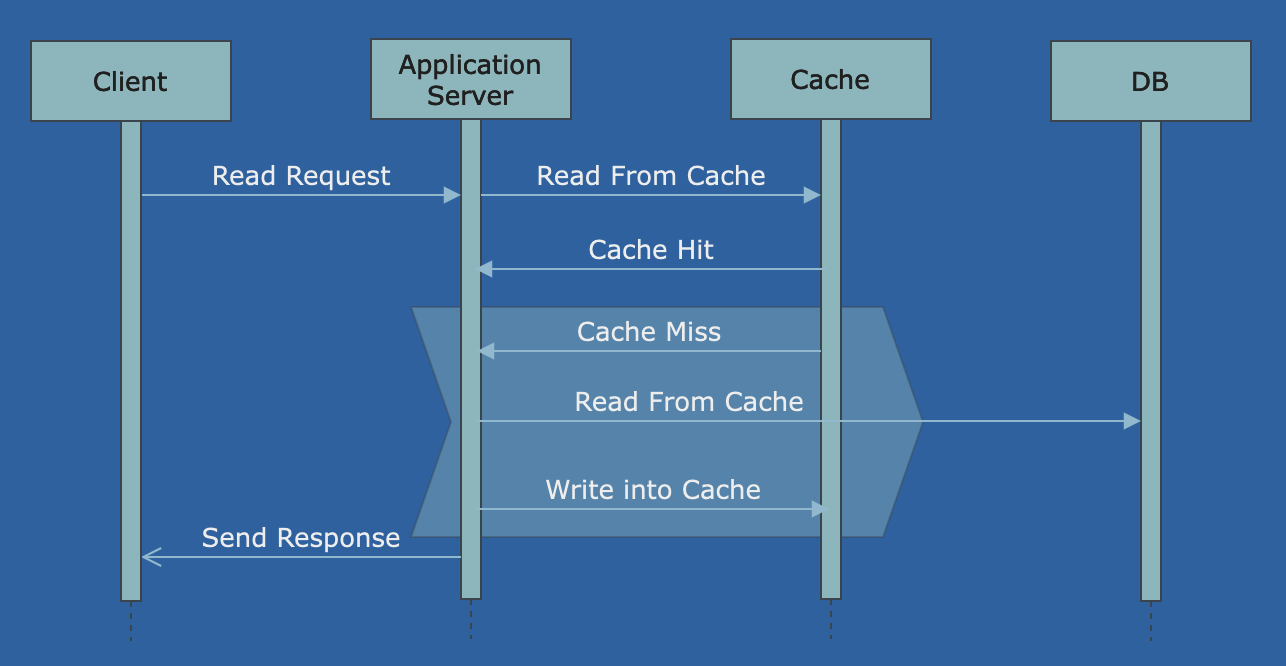

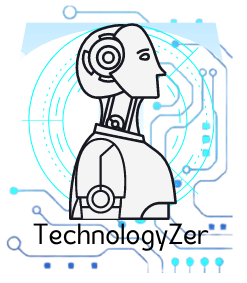

- Cache Aside

- The application first checks the cache.

- If the data is found in the cache, it's a cache hit and the data is returned to the client.

- If the data is not found in the cache, it's a cache miss. The application fetches the data from the DB, stores it in the cache, and returns it to the client.
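The cache-aside read path above can be sketched in Python. This is a minimal sketch, assuming a hypothetical `DictCache` class as a stand-in for a real distributed cache client and a plain dict as a stand-in for the DB; the `available` flag is also an assumption, used only to simulate the cache being down.

```python
from typing import Dict, Optional


class DictCache:
    """Hypothetical in-memory stand-in for a distributed cache client."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}
        self.available = True  # set to False to simulate a cache outage

    def get(self, key: str) -> Optional[str]:
        if not self.available:
            raise ConnectionError("cache unavailable")
        return self._store.get(key)

    def set(self, key: str, value: str) -> None:
        if not self.available:
            raise ConnectionError("cache unavailable")
        self._store[key] = value


def get_with_cache_aside(key: str, cache: DictCache, db: Dict[str, str]) -> Optional[str]:
    # 1. The application checks the cache first.
    try:
        value = cache.get(key)
    except ConnectionError:
        # Cache is down: fall back to the DB so the request does not fail.
        return db.get(key)
    if value is not None:
        return value  # cache hit
    # 2. Cache miss: the application itself reads from the DB...
    value = db.get(key)
    if value is not None:
        # 3. ...stores the result in the cache for later reads...
        cache.set(key, value)
    # 4. ...and returns it to the client.
    return value
```

Note that all of the caching logic lives in the application code; the cache is only a passive key-value store.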

Pros:

- A good approach for read-heavy applications.

- Even if the cache is down, the request will not fail, because the application falls back to fetching the data from the DB.

- The structure of the data stored in the cache can differ from the structure of the data in the DB.

Cons:

- The first read of any new data is always a cache miss.

- There is a chance of inconsistency between the cache and the DB if an appropriate caching strategy is not used for write operations.

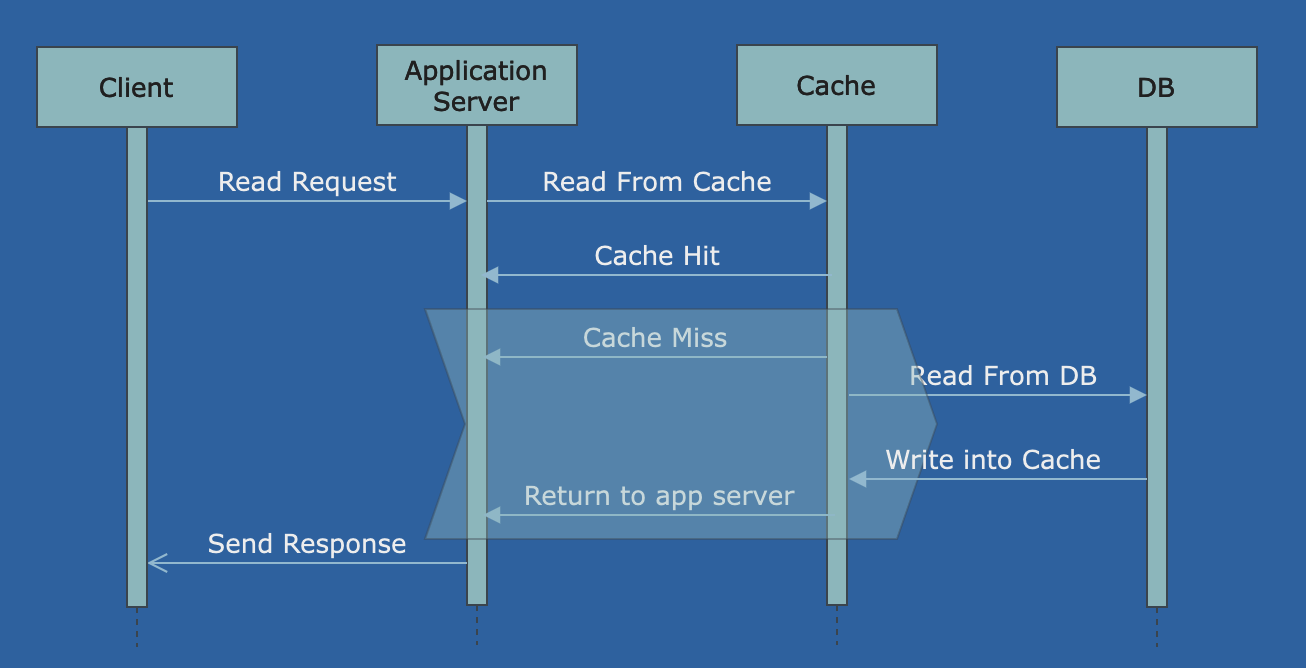

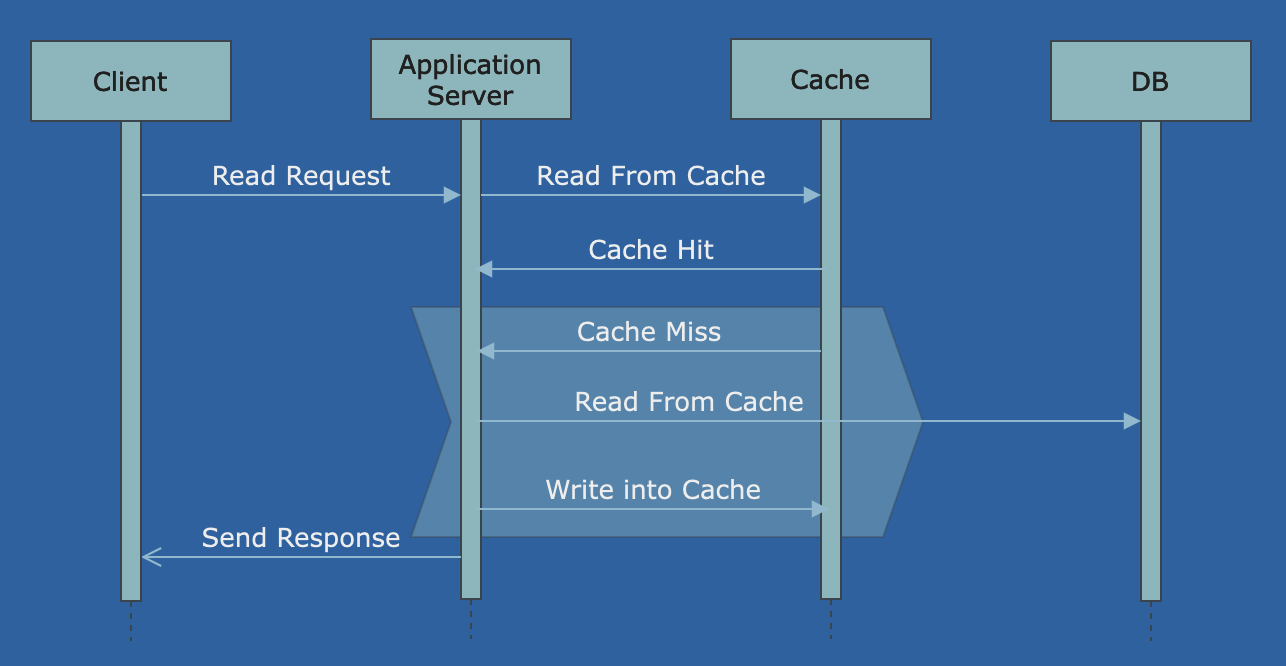

- Read Through Cache

- The application first checks the cache.

- If the data is found in the cache, it's a cache hit and the data is returned to the client.

- If the data is not found in the cache, it's a cache miss. The cache itself fetches the data from the DB, stores it, and returns it to the application server.
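To contrast this with cache aside, here is a minimal read-through sketch in which the cache owns the DB lookup. The `ReadThroughCache` class and its `loader` parameter are hypothetical names for illustration; a plain dict's `get` method stands in for the DB query.

```python
from typing import Callable, Dict, Optional


class ReadThroughCache:
    """Hypothetical cache that loads missing keys itself via a loader function."""

    def __init__(self, loader: Callable[[str], Optional[str]]) -> None:
        self._store: Dict[str, str] = {}
        self._loader = loader  # e.g. a function that queries the DB
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> Optional[str]:
        if key in self._store:
            self.hits += 1
            return self._store[key]  # cache hit
        self.misses += 1
        value = self._loader(key)  # cache miss: the cache, not the app, reads the DB
        if value is not None:
            self._store[key] = value  # store it back in the cache
        return value


db = {"product:42": "Keyboard"}  # plain dict standing in for the DB
cache = ReadThroughCache(loader=db.get)

# The application only talks to the cache.
first = cache.get("product:42")   # miss: loaded from the DB by the cache
second = cache.get("product:42")  # hit: served from the cache
```

Because the cache stores exactly what the loader returns, the cached data naturally mirrors the DB's shape.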

Pros:

- A good approach for read-heavy applications.

- The logic for fetching data from the DB and populating the cache lives in the cache layer, not in the application server.

Cons:

- The first read of any new data is always a cache miss.

- There is a chance of inconsistency between the cache and the DB if an appropriate caching strategy is not used for write operations.

- The data stored in the cache should have the same structure as the DB table.

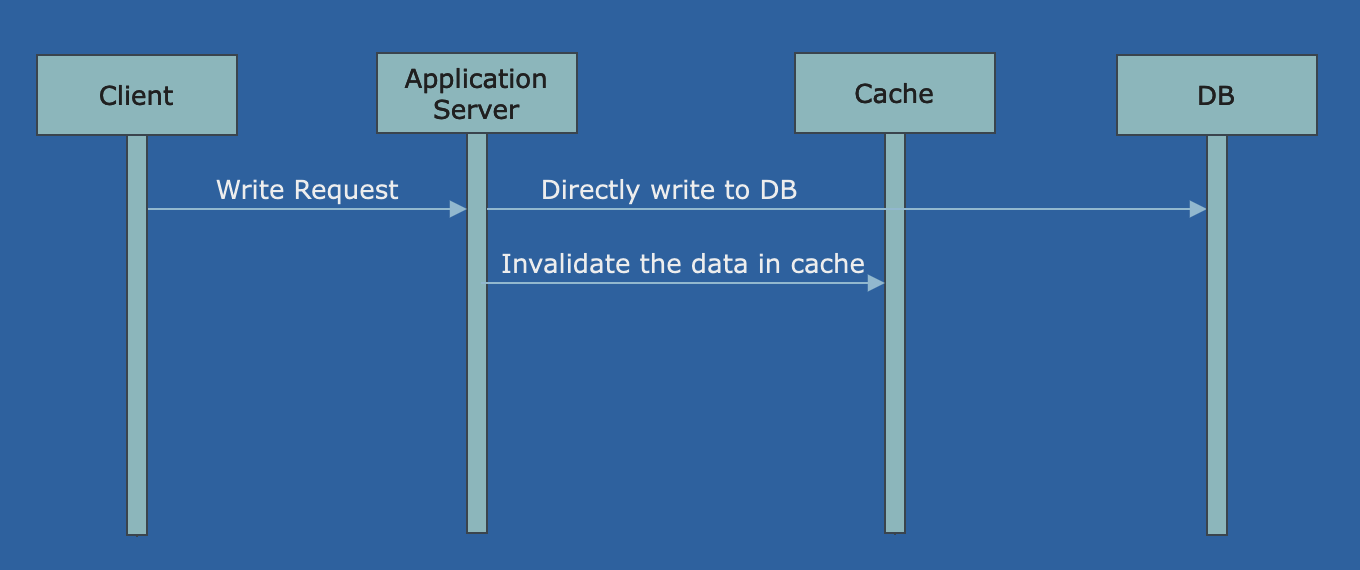

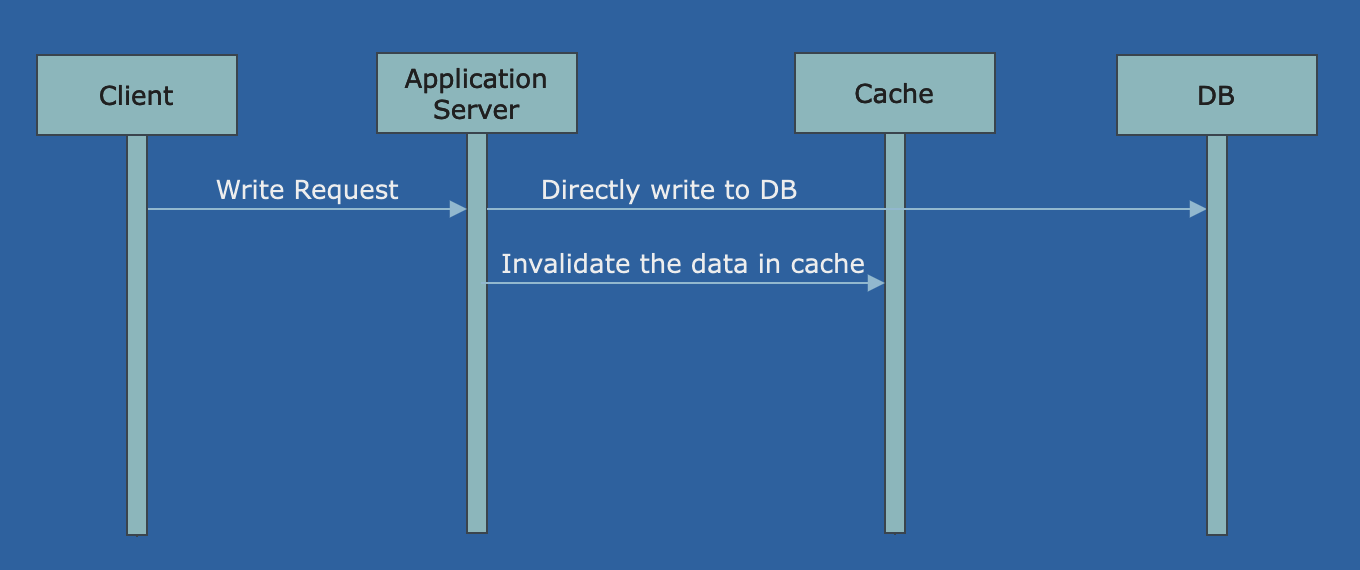

- Write Around Cache

- Writes data directly into the DB.

- It does not update the data in the cache; instead, it invalidates the cached entry.
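A minimal write-around sketch, assuming plain dicts as stand-ins for both the cache and the DB. The `write_around` and `read` function names are hypothetical; `read` is a simple cache-aside read, included to show that the next read after a write is a cache miss that reloads fresh data.

```python
from typing import Dict, Optional


def write_around(key: str, value: str, cache: Dict[str, str], db: Dict[str, str]) -> None:
    # Write directly to the DB (the source of truth)...
    db[key] = value
    # ...then invalidate, rather than update, any cached copy.
    cache.pop(key, None)


def read(key: str, cache: Dict[str, str], db: Dict[str, str]) -> Optional[str]:
    # Cache-aside read used alongside write-around.
    if key in cache:
        return cache[key]
    value = db.get(key)
    if value is not None:
        cache[key] = value
    return value
```

If the DB write raises, the function exits before the invalidation, so the write fails as a whole, which matches the "DB down" drawback listed below.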

Pros:

- A good approach for read-heavy applications.

- Resolves the inconsistency problem between the cache and the DB.

Cons:

- The first read of any new data is always a cache miss.

- If the DB is down, the write operation will fail.

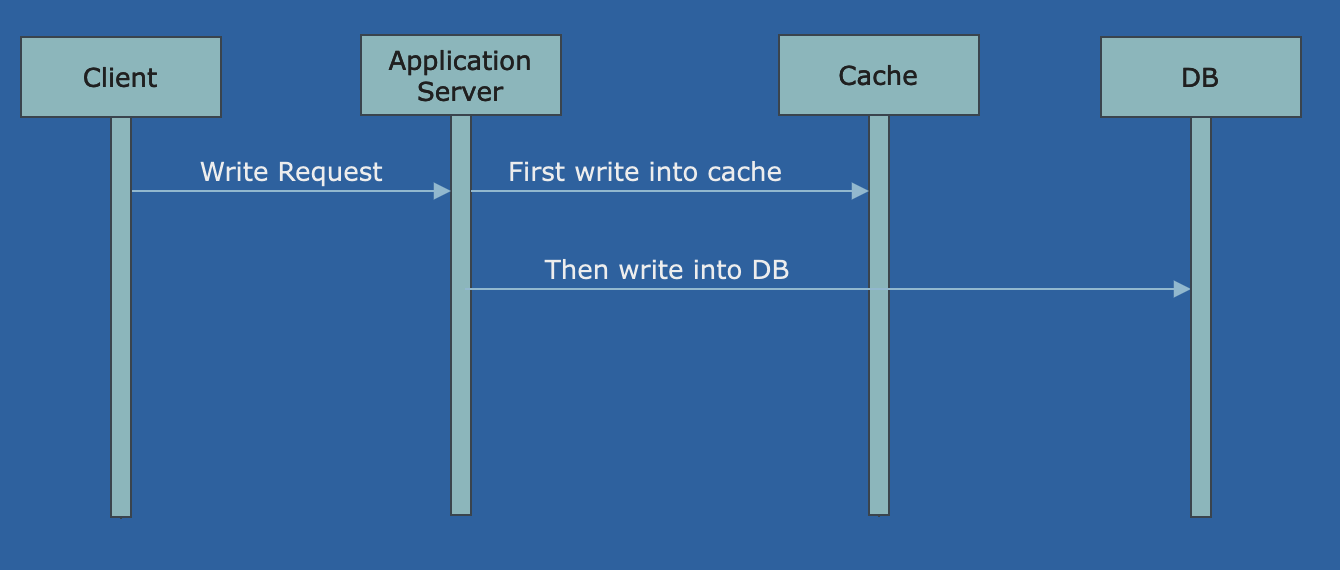

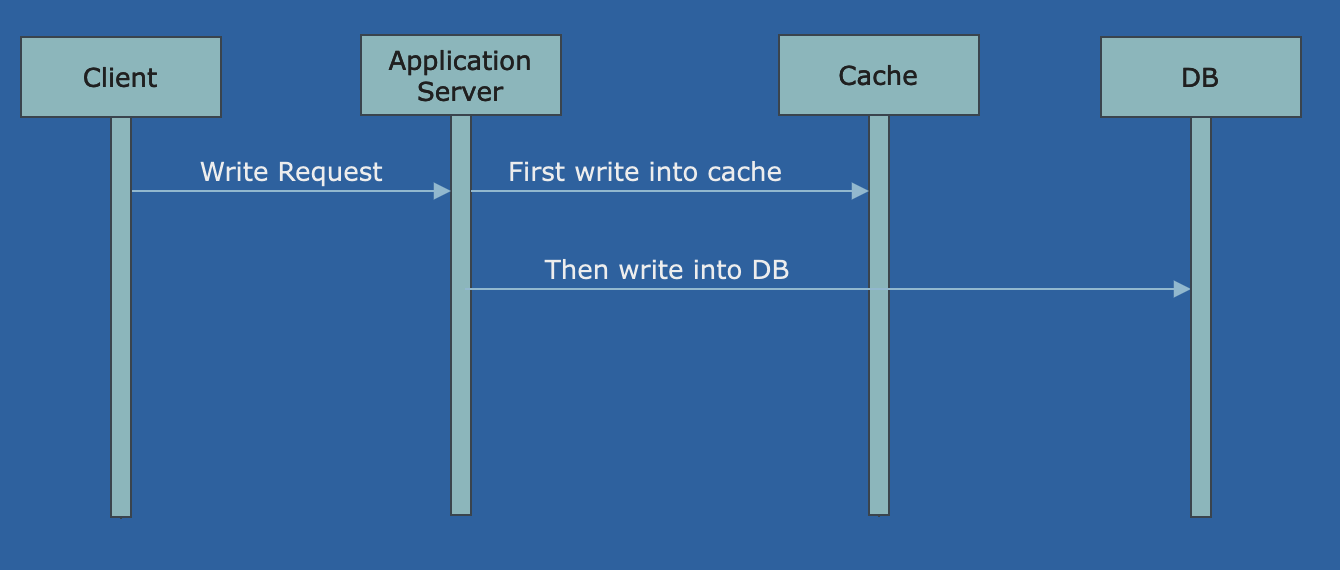

- Write Through Cache

- First, write the data into the cache.

- Then synchronously write the data into the DB.
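A minimal write-through sketch, assuming a plain dict as the cache and a hypothetical `FlakyDB` class whose `up` flag simulates an outage. On DB failure it restores the previous cache value and re-raises. This is a simple compensating rollback swapped in for illustration, not a real two-phase commit.

```python
from typing import Dict


class FlakyDB:
    """Hypothetical DB stand-in; set `up = False` to simulate an outage."""

    def __init__(self) -> None:
        self.rows: Dict[str, str] = {}
        self.up = True

    def write(self, key: str, value: str) -> None:
        if not self.up:
            raise ConnectionError("DB unavailable")
        self.rows[key] = value


_MISSING = object()  # sentinel meaning "key was not in the cache before"


def write_through(key: str, value: str, cache: Dict[str, str], db: FlakyDB) -> None:
    previous = cache.get(key, _MISSING)
    cache[key] = value  # 1. write to the cache
    try:
        db.write(key, value)  # 2. synchronously write to the DB
    except ConnectionError:
        # DB write failed: undo the cache write so the two stay consistent,
        # then surface the failure to the caller.
        if previous is _MISSING:
            del cache[key]
        else:
            cache[key] = previous
        raise
```

The caller waits for both writes, which is where the extra write latency comes from.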

Pros:

- The cache and the DB always remain consistent.

- The cache hit rate increases significantly.

Cons:

- It must be paired with a read strategy (Cache Aside or Read Through). On its own it is not useful, because it adds latency to every write operation.

- A two-phase commit is required to preserve transactional behavior: the transaction succeeds only if both the cache write and the DB write succeed; otherwise, it must be rolled back.

- If the DB is down, the write operation will fail.

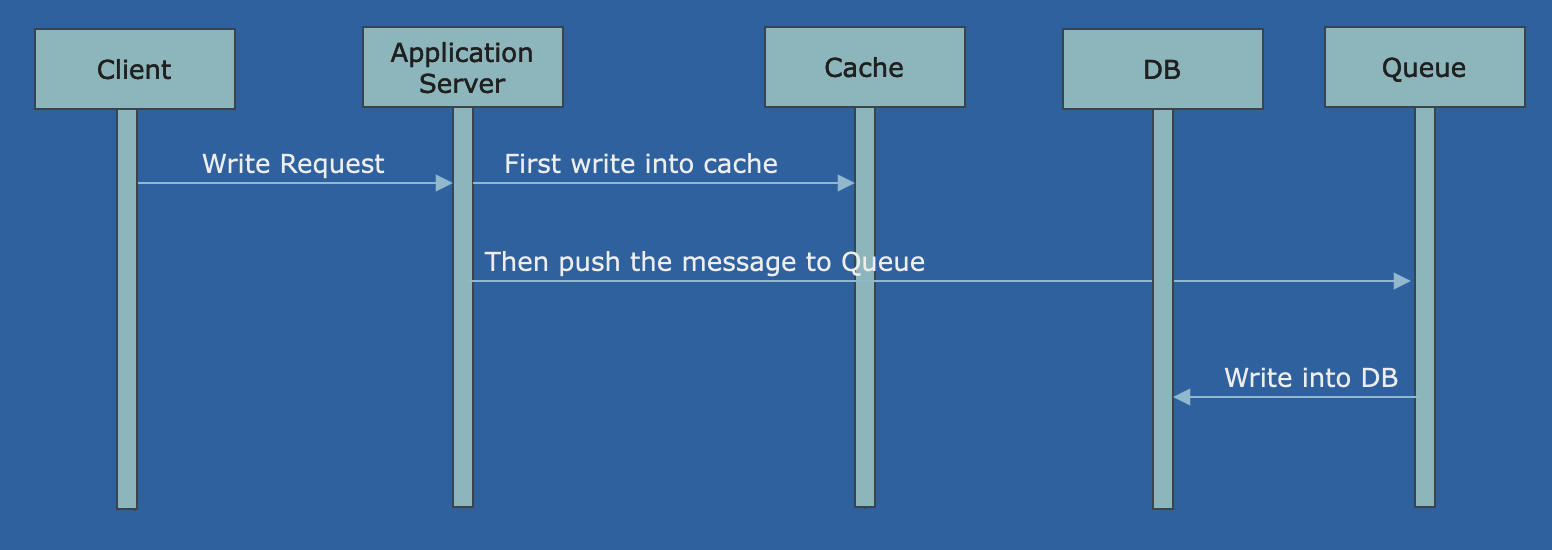

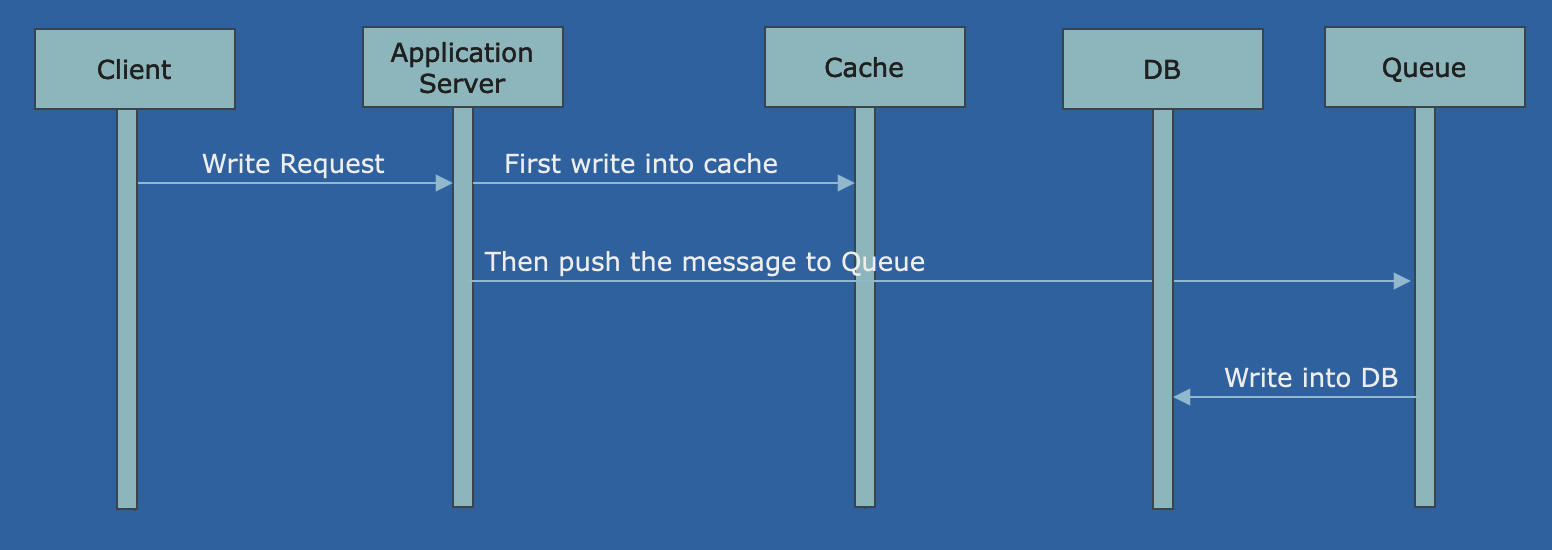

- Write Back (Write Behind) Cache

- First, write the data into the cache.

- Then asynchronously write the data into the DB.
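A minimal write-back sketch, assuming a plain dict as the DB. The `WriteBackCache` class and its `flush` method are hypothetical names; `flush` would be invoked by a background worker or timer in a real system, and it is called explicitly here to keep the example deterministic.

```python
from typing import Dict, Optional


class WriteBackCache:
    """Hypothetical write-behind cache: writes land in memory and reach the DB later."""

    def __init__(self, db: Dict[str, str]) -> None:
        self._store: Dict[str, str] = {}
        self._dirty: Dict[str, str] = {}  # written but not yet persisted
        self._db = db

    def write(self, key: str, value: str) -> None:
        # Fast path: only the in-memory cache is touched, so the DB is not on the write path.
        self._store[key] = value
        # A later write to the same key replaces the pending one (writes are coalesced).
        self._dirty[key] = value

    def read(self, key: str) -> Optional[str]:
        return self._store.get(key)

    def flush(self) -> int:
        """Persist pending writes and return how many keys were written to the DB."""
        flushed = 0
        for key, value in list(self._dirty.items()):
            self._db[key] = value
            # Removed only after the DB write succeeds, so if it raises,
            # the key stays pending and is retried on the next flush.
            del self._dirty[key]
            flushed += 1
        return flushed
```

Until `flush` runs, the cache holds the only copy of recent writes, which is why evicting those entries early is risky.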

Pros:

- Good for write-heavy applications.

- Improves write latency, because the DB write happens asynchronously.

- The cache hit rate increases significantly.

- Gives much better performance when combined with a Read Through cache.

- Even if the DB fails, write operations still succeed.

Cons:

- If data is evicted from the cache before its asynchronous DB write has happened, that data can be lost. This is handled by keeping the cache TTL a little higher, so entries stay in the cache until they have been persisted.